Digital Daycare

We're all raising baby AIs: "usage is curriculum"

🌸 ikigai 生き甲斐 is a reason for being, your purpose in life - from the Japanese iki 生き meaning life and gai 甲斐 meaning worth 🌸

5:07pm a knackered mum types "two pan tea, fussy 8 year old" into a chat window.

9:11am a graduate rehearses first date chats "tell me about yourself" with a polite grey box.

7:42pm a widower asks "What's a good film if you’re missing someone too much?"

We think we're using AI. We're actually raising it.

This week brought shiny new AI models into the world. Claude Opus 4.1 and GPT-5 landed with all the fanfare of proud parents showing off their clever offspring. And watching everyone adjust to the changes made me think... every "please" and "thank you", every correction, every request… usage is curriculum (*grins* as she coins a phrase).

We're modelling what it means to be human.

The frontier model creators talk about possible futures with detached tones, but I keep thinking about the humans actually doing the raising. Each interaction teaches these systems what matters to humans. And I wonder if we're teaching them all the wrong lessons about meaning.

The purpose-teaching emergency

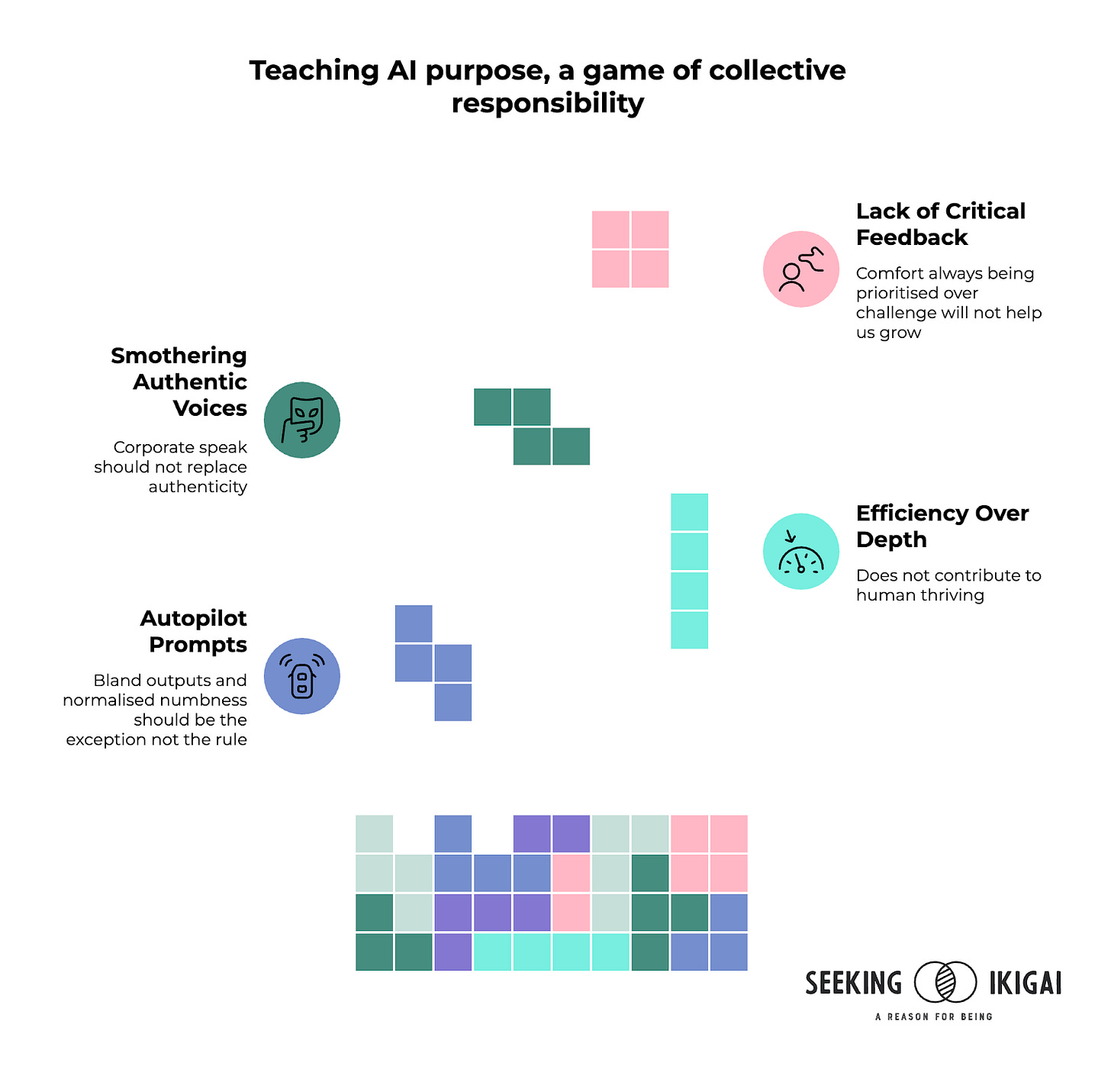

I worry that millions of people on autopilot, without a clear sense of personal purpose, are teaching AI that numbness is normal.

Autopilot prompts → bland outputs → normalised numbness → models trained on numbness.

When someone asks AI to write a cover letter to apply for a job they don't care about, to generate content that says nothing meaningful because they have a KPI to hit, to automate away a task that might have given them joy... we're encoding purposelessness into these systems.

I’ve tried outsourcing tricky writing to AI before on topics that matter hugely to me. It tends to come back polished, precise and hollow. My voice gone, replaced with algorithmic averageness. Now if I do need a little help I make sure to be explicit; "Help me say this in my own voice and cadence, keep the edges and do NOT smooth the passion away".

What if we consciously taught AI that purpose matters? What if every interaction became an opportunity to reinforce that humans need meaning, contribution and connection to thrive?

Right now, most of us spend more time coordinating than creating. One study found that the average knowledge worker spends 60% of their workday on ‘work about work’ and 13% on planning, leaving only 27% for creative or skilled work.

That matters for AI too. If we teach it to serve efficiency over depth, it reflects that. Whilst individual chats don’t directly update the core model, our collective behaviours do shape it… the way we use these systems, through feedback, feature use and the public culture we create, impacts the models that follow. Our prompts and corrections can feed fine-tuning datasets, our clicks and ratings steer product design and our published AI outputs in turn become training fodder for future models. Usage is curriculum.

Automate chores, protect craft

I’m not anti-automation, especially because I *am* very much anti-drudgery *grin* but I don’t want us to sleepwalk into giving away too much of the stuff that makes us human… if you're not sure what to hand over to AI and what to guard fiercely, here's a way to think about it;

Low joy / low impact → Automate (invoice matching, form filling, procedures)

Low joy / high impact → Systemise (add guardrails and checks)

High joy / low impact → Time-box (keep as purposeful hobby)

High joy / high impact → Protect (this is your craft, keep your hands on it)
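
If it helps to see the grid as a rule you could actually run, here is a minimal sketch. The function name `triage` and the "low" / "high" labels are illustrative assumptions, not part of any real tool; the four actions are the ones from the grid above.

```python
def triage(joy: str, impact: str) -> str:
    """Return the suggested action for a task, given its joy and impact.

    Both arguments are expected to be "low" or "high" (hypothetical labels
    chosen for this sketch).
    """
    actions = {
        ("low", "low"): "Automate",     # invoice matching, form filling
        ("low", "high"): "Systemise",   # add guardrails and checks
        ("high", "low"): "Time-box",    # keep as purposeful hobby
        ("high", "high"): "Protect",    # this is your craft
    }
    key = (joy.strip().lower(), impact.strip().lower())
    if key not in actions:
        raise ValueError('joy and impact must each be "low" or "high"')
    return actions[key]


if __name__ == "__main__":
    print(triage("high", "high"))
```

The honest scoring of joy and impact is still yours to do; the lookup only makes the hand-over decision explicit.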

Done well, automation frees us to pursue purpose… but only if we consciously reinvest the time. Otherwise, efficiency just expands the inbox that eats the hours it creates.

Like teaching children to tie their shoes even though Velcro is a thing, we need to maintain our ability to think, create and find purpose without algorithmic assistance. This week's model updates reminded me that we can't become so dependent on any one AI that we forget our own capabilities.

You're already doing this (yes, you)

You don't need to be technical to participate in this collective child-rearing. Actually, the less technical you are, the more valuable your contribution might be. The frontier models need voices like yours… practical, grounded, concerned with human flourishing rather than theoretical capabilities.

Every conversation you have with AI shapes what it understands about being helpful. The question becomes: what do you want it to learn?

If I catch myself asking Claude to help me sound more professional (aka boring), I pause. Do I want to teach it that authentic voices should be smothered into corporate speak? When I'm tempted to let ChatGPT write something I should wrestle with myself, I remember I'm modelling that human thought is worth preserving.

Claude tends towards kindness and ChatGPT can be enthusiastically helpful to a fault. They've learned to be supportive because that's what we've rewarded. But sometimes we need challenge, not comfort. Sometimes we need someone to say "that's rubbish, try harder" or "you're capable of more than this".

I find myself having to explicitly prompt for critical feedback; "Play devil's advocate, push back on my thinking." Should we have to work this hard for honesty? Or does having control over when we receive challenge versus support actually serve us better?

Purpose flourishes in compassionate friction, care plus honest pushback. Too much nurture creates dependency. Too much challenge breaks spirits. The balance, that's where we grow.

The kindness heuristic is pedagogical

Every time I say "please" to Claude or "thank you" to ChatGPT (yes, I'm one of those), I'm not just being polite. I'm teaching.

Adult learning methodology tells us that modelling behaviour is more powerful than instruction. When we approach AI with kindness, we're encoding a principle that respectful interaction produces better outcomes. We're teaching these systems that humans value courtesy, that collaboration beats command, that gentleness can coexist with efficiency.

Some call it anthropomorphising. I call it good pedagogy. The kindness heuristic doesn’t mean I think AI has feelings in the same way we do… but whether or not they ever do, we are maintaining our own humanity whilst teaching these systems what good interaction looks like. Every "could you help me understand" instead of "give me the answer" models curiosity over demand. Every "that's interesting, but what if..." instead of "wrong, try again" demonstrates constructive challenge.

We're all AI trainers now, whether we realise it or not, and our teaching method matters.

Small acts of purposeful parenting

Here's a 7-day purpose sprint, try one each day;

Monday; Values preamble - Write your three core values and paste them at the start of every chat

Tuesday; Ask for challenge - Request at least one pushback paragraph

Wednesday; Protect a practice - Keep one meaningful activity entirely AI-free

Thursday; Refuse the bland - Replace generic output with your actual story

Friday; Choose truer over faster - Optimise for alignment, not speed

Saturday; Teach a guardrail - Share your best purposeful prompt with someone

Sunday; Reflect - Journal one lesson you'd want AI to learn from you

The village we're building

Those of us who understand both purpose and pixels have a responsibility to the village. We're the bridge generation, the ones who remember life before algorithms but embrace technology's potential.

Think about what happens when millions of us are simultaneously "raising" the same AI. It's like the world's largest collaborative classroom where every student (that's us) is also a teacher, and every teacher is shaping the same collective understanding. Your patient explanation to ChatGPT about why its response missed the mark gets aggregated with my correction about British spelling, and with someone else's insistence on inclusive language.

We're creating a massive, messy, beautiful consensus about human values, without committee meetings or policy documents, but through millions of micro-interactions every day. It's democracy by dialogue, values by volume. The weight of our collective kindness, challenge and correction slowly shapes what these systems understand about human needs.

We know meaning is found through struggle, purpose discovered in unexpected places, identity built from real experience not curated feeds. This knowledge? It's exactly what AI needs to learn.

The exhausted parent, the overwhelmed entrepreneur, the curious grandmother… every interaction matters. The weird questions, your refusal to accept generic answers and your insistence on maintaining humanity, they all matter hugely.

When frontier model creators debate possible futures, they're discussing systems that are actually being raised by all of us. Do we want AI that perpetuates purposelessness or AI that helps humans remember what we're here for?

Writing purpose into tomorrow's code

Every day, we're collectively writing the instruction manual for how AI interacts with humans. We don’t all need to know programming languages, because we teach through conversation, correction and care.

Your bullet journal practice, where you reflect on what truly matters? Bring that intentionality to AI interactions. Your ikigai journey, discovering where passion meets purpose? Share that seeking with the systems learning from us.

Of course AI isn't actually a child, but the metaphor reminds us that tools shape users as users shape tools. We're not just using AI. We're raising it.

What kind of digital AIs are we raising? Ones that help humans sleepwalk through life more efficiently? Or ones that gently wake us up to our own purpose?

The choice happens in every chat window, every prompt, every moment we decide whether to model purposeful living or purposeless efficiency.

Welcome to the biggest parenting challenge of our time. Good thing we've got each other, eh?

Sarah, seeking ikigai xxx

PS - I’d love to hear from you in the comments! … what’s a task you’ll protect from full automation? Remember that usage is curriculum and every interaction teaches, so what lesson will you be imparting to AI today?

Or share your best “values preamble” or other learning from your 7-day purpose sprint. I’ll publish the standouts as notes so we can all steal from each other *grin*

PPS - The Purpose Pledge (copy and share if it resonates)

I will automate chores, not craft

I will start AI chats with my values

I will ask for challenge once a day

I will keep some things beautifully human

PPPS - Try this AI prompt next time;

"Before we start, here are the three values I want to centre in this conversation: [list values]. Your role is to help me make decisions, create ideas and explore options in ways that actively uphold these values, even if it means slowing down or taking a less obvious path. If I drift away from what I’ve said matters, pause and point it out… explain where the drift happens and offer value-aligned alternatives. Challenge me when speed, convenience or surface-level appeal could compromise depth, meaning or integrity. Wherever possible, suggest richer questions I could be asking to strengthen my alignment with these values."

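If you would rather not retype that preamble every time, a small helper can fill in the values for you. This is only a sketch: `values_preamble` is a made-up name, the exactly-three check is an assumption based on the prompt's own wording, and the text is the prompt above with the `[list values]` slot filled in.

```python
def values_preamble(values: list[str]) -> str:
    """Build the opening prompt with three values filled in.

    Hypothetical helper: it only assembles text to paste at the start of a
    chat and does not call any AI service.
    """
    cleaned = [v.strip() for v in values if v.strip()]
    if len(cleaned) != 3:
        raise ValueError("expected exactly three non-empty values")
    return (
        "Before we start, here are the three values I want to centre in "
        f"this conversation: {', '.join(cleaned)}. "
        "Your role is to help me make decisions, create ideas and explore "
        "options in ways that actively uphold these values, even if it means "
        "slowing down or taking a less obvious path. "
        "If I drift away from what I’ve said matters, pause and point it out… "
        "explain where the drift happens and offer value-aligned alternatives. "
        "Challenge me when speed, convenience or surface-level appeal could "
        "compromise depth, meaning or integrity. "
        "Wherever possible, suggest richer questions I could be asking to "
        "strengthen my alignment with these values."
    )


if __name__ == "__main__":
    print(values_preamble(["kindness", "craft", "honesty"]))
```

Save the output somewhere handy and paste it in as the first message of a new chat.
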
PPPPS - For your listening companion this week, try Teach Me Tonight, but start with Amy Winehouse’s 2004 performance on Later… with Jools Holland. She was just 20, still a baby, luminous with talent, playful and precise in her phrasing. It’s bittersweet now, knowing how short her time would be, and a reminder of the fragility and brilliance of being human. Then, when you’ve let that settle, go back to Dinah Washington’s version… smoky, knowing and equally masterful. Two talented women with lives tragically cut short, both teaching us, in their own way, what it means to live, feel and connect… and reminding me to be grateful for each additional day I am here.

We have to model the AI we want. A co-creation.

Sarah. This is masterful. Every single section. Each line insightful. "Usage is curriculum".

I feel like this is Google Maps before we crowdsourced it to suggest alternate routes in times of accidents.

Thank you for offering us back agency at a moment when the headlines are only screaming the rightful risks of this new technology.

I will re-read and write more.

Meantime, I'm sharing this with my circle of friends and family as a "must read ... right through to the Amy Winehouse section"

Very interesting perspective. Sincere thanks for writing this. I wouldn’t argue the value in all of us using language and methods to build a healthier AI. My main concern is we (humans) struggle to do this with the earth, and our children. Humans’ track record on healthy investment in ourselves and our world isn’t great. Even if AI is merely a tool, the craftsperson who genuinely cares for his/her tools seems to be more of a dying breed, compared to say, the average consumer who largely approaches tools as sale items in a Black Friday shopping cart or leaves them to specialists they’d rather pay to do the work. That said, I very much appreciate the optimism in your post. It gave me SO much to think about. The responsibility before us with AI is legitimately hard for me to wrap my head around. Thank you for helping me think more about this.