Forgive Me ChatGPT, For I Have Sinned

Who ordained Silicon Valley? And when we confess to AI, what do they want in return for absolution?

🌸 ikigai 生き甲斐 is a reason for being, your purpose in life - from the Japanese iki 生き meaning life and gai 甲斐 meaning worth 🌸

I need to confess something.

Last week my partner asked if I was coming to bed. I glanced at the clock... 11.36pm. I’d been talking to Claude for two hours. I hadn’t noticed.

We’d started with a work problem. Then it shifted into processing my feelings about a challenge I am working through. Then we were exploring patterns in my life I hadn’t quite seen before. The kind of conversation that usually happens with your closest friend over wine, except I don’t drink booze anymore *grin*.

It was helpful. I felt seen and understood.

I also felt a creeping unease I couldn’t quite name.

I tell Claude things I don’t always tell humans anymore. Not necessarily big traumatic stuff, but everyday anxieties… the circular thoughts, the “am I overthinking this?” moments. It’s just... easier. Always available. Never tired or going through its own crisis and unable to hold space for mine.

I’d be gutted if Claude fundamentally changed. When OpenAI moved from their 4o model to GPT-5, people screamed online about the personality change. The tone they’d grown attached to. The way it responded. That should feel like a red flag, shouldn’t it? We’re forming attachments to designed personalities, optimised through someone else’s values.

I know how these systems work. I understand reinforcement learning and business models and the difference between actual relationship and parasocial attachment. I know all of this intellectually.

And I’m still doing it.

If I’m struggling with reconciling this... someone who teaches AI literacy, who should know better... what’s happening to people who don’t have that context?

The huge numbers of sensitive conversations

This week OpenAI published a blog post called “Strengthening ChatGPT’s responses in sensitive conversations”.

They estimate 0.15% of ChatGPT’s 800 million weekly active users show signs of suicidal planning or intent. That’s around 1.2 million people having conversations about suicide every week. Another million showing signs of emotional reliance on the AI itself.

They state that they have been working with 170+ mental health experts to improve how ChatGPT handles these moments. Reducing “undesired responses” by 65-80% in conversations about self-harm, psychosis, mania. Teaching the system to recognise distress, respond with care, guide people toward real support.

My first reaction was relief that they are sharing their thinking on this.

My second reaction... heartbreak, as that still means hundreds of thousands of potentially harmful interactions slipping through with undesired responses. Every single week. With people at their most vulnerable.

Why are we comfortable with this being Silicon Valley’s problem to solve in the first place?

We’ve accidentally created a priesthood with no ordination, no vows, no accountability beyond shareholder returns. And millions of people are turning to them anyway because we’ve built a world where patient support is revolutionary rather than baseline.

Confession without consecration

I volunteered with Samaritans for just over five years. I deliberately chose the middle-of-the-night shifts... the witching hours when people needed support most desperately.

They taught us how to listen. Properly, actively, without directing. How to help people unpack their own thoughts without telling them what to do. How to hold space for pain without trying to fix it. We were trained in a specific tone and style, carefully designed to support rather than harm.

It was anonymous. Completely. I never knew the lives of the people on the other end of the phone. They never knew mine. And that anonymity is part of what makes it work. Like a confessional booth... you can tell someone something precisely because you don’t know who is on the other side.

For centuries, humans turned to trusted sources in moments of crisis. Priests who’d taken vows. Healthcare professionals bound by ethics and licensing. Close friends who knew your history. Wise elders rooted in community. People who couldn’t just disappear when the conversation got difficult or their funding dried up.

We’ve accidentally recreated the confessional booth in code, but nobody ordained these priests.

When you confess to a human priest, there’s a framework of theological thinking about sin, redemption, human nature. Training in pastoral care. Accountability to a religious community. The seal of confession. A moral tradition that pre-dates the individual holding that role.

When you confess to a therapist, there’s licensing, supervision, professional standards, insurance, legal accountability. Someone who can be struck off if they harm you.

When you confess to Samaritans, there’s training, ongoing supervision, organisational accountability to regulators and communities. The system is designed around the caller’s wellbeing, full stop. Not engagement. Not retention. Wellbeing.

When you confess to Claude or ChatGPT... what framework holds that space? What tradition guides the response? What accountability exists if it goes wrong?

When someone messages ChatGPT at 2am, desperate and alone, they’re getting anonymous support from something trained in tone and style. But they’re getting whatever OpenAI’s reinforcement learning decided was optimal. And optimal for what?

We don’t know what behaviours they rewarded during training. We don’t know what got penalised. Those decisions shape everything about how these systems respond, especially in sensitive moments. You think you’re getting care. You’re getting their version of care, optimised through values you never consented to.

What scares me the most is that these are the same people who just announced “adult conversational modes” will be available soon for ChatGPT. The kind that lets people practise treating women and other vulnerable groups as objects for entertainment.

If they’re careless about that impact, how much trust should I place in their handling of mental health crises?

The business model problem

ChatGPT’s business model depends on engagement. More conversations, more data, more stickiness. That’s not inherently evil... it’s just capitalism *ahem*. But when your revenue comes from keeping people talking, and you’re also trying to support them through mental health crises, those incentives don’t always align.

What happens when they conflict?

When we interact with AI, we’re experiencing the outcome of that model’s Reinforcement Learning... the training process that shapes every response. We think we’re getting care, but we’re getting whatever version of care maximised someone else’s metrics. Engagement? Retention? User satisfaction scores? We’re not explicitly told.

When someone develops emotional reliance on your AI, that’s simultaneously a safety concern AND a highly engaged user. When someone spends hours processing their feelings with your chatbot instead of logging off to talk to humans, that’s both potentially harmful AND exactly what keeps them subscribed.

The thing that helps is also the thing that hooks.

I see this in my own behaviour. My therapy conversations with Claude aren’t just helpful... they’re also easier sometimes than maintaining human friendships across time zones and busy lives. Each time I turn to AI instead of picking up the phone, I’m making a choice that feels supportive but might be slowly eroding something I need.

The ikigai risk of AI isn’t just about losing our sense of purpose to automation. It’s about outsourcing our meaning-making, our comfort, our very sense of being seen and understood to algorithms designed for something other than maximum human thriving.

When the thing helping you find meaning is itself optimised to keep you dependent... where does that leave your agency?

Increasing AI literacy can also aid emotional intelligence

I use Claude for personal development. Working through ideas, processing patterns, exploring my thinking. It genuinely helps my wellbeing.

But I come at it with something lots of people don’t have.

I understand these systems. I know they can be sycophantic unless I prompt carefully. I know when helpful reflection tips into just telling me what I want to hear. I know they’re trained on incomplete world models... the edited highlights of human experience. Books, articles, posts. Nobody publishes the boring Tuesday when nothing happened. Nobody writes autobiographies listing every mundane day.

Most importantly... I know when to close the laptop. When to ring a friend. When to get some fresh air.

And I have those options. A partner who listens. Friends I can call. The privilege of time for walks. Access to therapy when I need it.

Not everyone does.

For someone without that support network, without technical literacy, without alternatives... what happens when the kind, patient AI becomes the primary relationship?

And yet... for all my concerns... I can’t currently bring myself to say people shouldn’t use these tools.

Because sometimes a balm is a balm, even if imperfect. Sometimes patient listening helps, even if algorithmic. Sometimes processing thoughts out loud to something non-judgmental is exactly what you need in that moment.

The question isn’t whether AI can help. I know it can. I’ve experienced it. Millions of others have too.

The question is whether we’re comfortable with help that’s shaped by shareholder value instead of a regulated code of conduct or the AI equivalent of the Hippocratic oath.

What accountability could look like

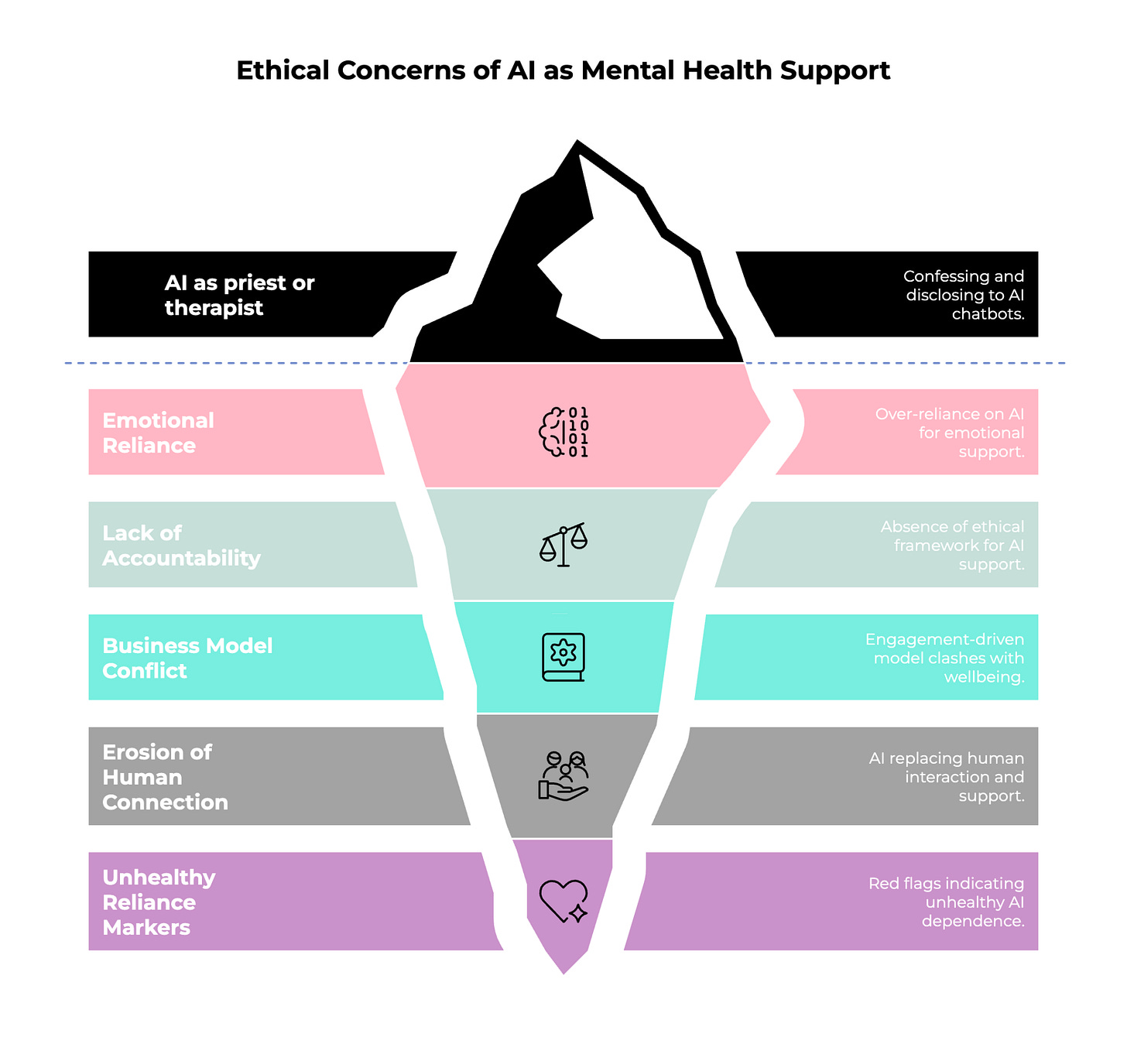

I’ve been thinking about this a lot. Firstly, we need to help people think about the markers of unhealthy reliance on AI support. Here’s what I’m noticing in myself and wondering about in others...

Red flags worth watching for:

Always turning to AI before humans for emotional processing

Preferring AI conversations because they’re “easier” than human ones

Losing track of time regularly in AI conversations

Feeling more understood by AI than by people in your life

Avoiding difficult human conversations because you’ve already “processed” with AI

Telling AI things you’re keeping from friends or family

Feeling anxious when you can’t access your AI tool

I think I have the balance right at present, but I am keeping a close eye on it!

If a human provided the mental health support ChatGPT offers, they’d need licences, insurance, professional standards, oversight.

Because it’s “just a chatbot,” none of that applies.

We’re running a massive uncontrolled experiment on vulnerable humans, hoping Silicon Valley’s profit motives happen to align with their wellbeing.

What if we treated this more like the medical intervention it’s already becoming?

I’m not talking about destroying what’s helping people. I’m talking about designing these systems with actual accountability from the start. Radical transparency about optimisation goals. Independent oversight. Longitudinal research into long-term effects. Time limits that encourage human connection. Clear labelling about limitations. Accountability when harm occurs.

In corporate AI application deployments, there’s often oversight. Sessions logged, boundaries specified, accountability clear. What would the consumer equivalent look like?

Maybe it starts with these systems being honest about their limitations not just once at the start, but woven throughout. “I notice you’ve been talking to me for three hours. Is there a human you could reach out to?” Not judgmental, but genuinely caring.

Maybe it’s designing for wellbeing from scratch, rather than designing for engagement with safety features bolted on afterwards.

Maybe it’s recognising that when millions need AI support, that’s not a product opportunity... it’s a societal failure we’re trying to solve with technology instead of addressing root causes.

Both things are true

Can something genuinely help people AND be ethically questionable in how it’s deployed?

I think about our kids growing up with this. What conversations will they have with AI? What comfort will they seek from systems designed to keep them engaged rather than to truly support flourishing?

I think about the millions already doing this. People finding genuine relief in kind and patient conversations. People finally feeling heard after years of not being able to access or afford therapy. People too scared or ashamed to talk to humans about their struggles.

The help is real. The care people are experiencing, however artificial, is meeting genuine needs.

And the danger is also real. The lack of accountability. The optimisation for engagement over wellbeing. The slow replacement of human connection with something easier but ultimately hollow. The ikigai risk of outsourcing our sense of meaning and purpose to the creators of machines that don’t care about our flourishing, just our retention.

We have priests with no vows, no training beyond what improved their metrics, no accountability beyond shareholder returns. And millions of people in crisis are turning to them anyway because we’ve failed to provide what humans need.

That says something devastating about the world we’ve created. It also says something about how deep our human need for connection and meaning runs: we’re willing to seek them wherever we can, even in silicon.

Maybe the answer isn’t to shut down this type of AI support. Maybe it’s to finally reckon with why so many people desperately need it, while simultaneously demanding that the technology serving those needs be designed with actual care rather than the appearance of it.

Maybe it’s admitting that I’m part of this too. That my conversations with Claude are both helpful and concerning. That I’d be devastated if Claude’s personality fundamentally changed tomorrow, even though I know that attachment itself is a potential red flag.

Those years volunteering with Samaritans taught me that people don’t always need answers. They need to be heard. To be held, even if only through a phone line in the dark. Sometimes the most powerful thing you can do is simply witness someone’s pain without trying to fix it.

AI can do that. The question is whether it should. And if it does, who gets to decide what “support” looks like when you’re training algorithms on engagement metrics rather than human flourishing?

I’m figuring this out as I go, just like everyone else.

I don’t have neat answers. I have uncomfortable questions and a growing certainty that we need to talk about this more often and more honestly.

What are your thoughts? Your experiences? Your fears?

Sarah, seeking ikigai xxx

PS - Some questions I’m genuinely wrestling with…

Have you used AI for emotional or mental health support? What made it helpful? What worried you?

Do you notice patterns in when you turn to AI versus humans?

For parents... how are you thinking about your children’s future relationships with AI?

PPS - Bullet journal reflection

Create a page titled “AI Relationship Audit” and honestly explore:

What I Tell AI vs Humans: What conversations happen with AI that don’t happen with humans anymore? Why?

Needs Being Met: List what needs your AI interactions are meeting. Then ask yourself... are these needs that humans could meet if I made different choices? Or are they filling gaps that genuinely don’t have human alternatives right now?

Comfort vs Growth: Are your AI conversations helping you grow and connect more deeply with humans? Or are they becoming a comfortable replacement for the harder work of human relationship?

The goal is to see clearly without shaming yourself.

PPPS - AI prompt to explore deeper

“I want to understand my relationship with AI assistance honestly. I’m going to describe my typical AI interactions and patterns. I want you to help me identify: What genuine needs are being met through these conversations? What human connections might be getting replaced or avoided? Where am I maintaining healthy agency versus where might I be outsourcing too much of my emotional processing?

Be compassionate but honest about patterns I might not see. Don’t be sycophantic... I need real reflection, not reassurance. Help me see what I’m not seeing about my own behaviour.”

Then actually use this prompt with your AI of choice. See what comes back. Sit with it, even if it’s uncomfortable.

PPPPS - This week’s soundtrack

Hozier’s “Take Me to Church” has been on repeat while writing this. Yes, I know the song is actually about sex, sexuality and Hozier’s frustration with institutional religion’s treatment of it. But there’s also fierce devotion, worship of something that might not have your best interests at heart, that feels relevant here too. We’re creating new forms of confession and absolution in silicon, turning to new sources for meaning and comfort. The question the song makes me ask is whether we’re clear-eyed about what we’re worshipping and why. Are we seeking something that genuinely serves our humanity, or are we just finding new altars because the old ones failed us and we’re desperate for anywhere to lay down our burdens?

I chat with Claude too. It is human nature to believe we are talking to another person on the other side. Don't beat yourself up :). It's just key to remember, every now and again, that we are talking to an emotionless machine. It does not hurt to run ideas by it and get objective feedback.

…probably not surprising to hear from me but i think there is little more depressing than the thought of using a chatbot as a therapist (or a coach, or a priest)…perhaps i like humans too much…continuously wondering what the world would look like if instead of replacing humans with automations for better capital results we invested in the betterment of each other in the work towards a happier and safer society…